Each model below is tagged with the kind of input it takes: text, audio, or picture/video.

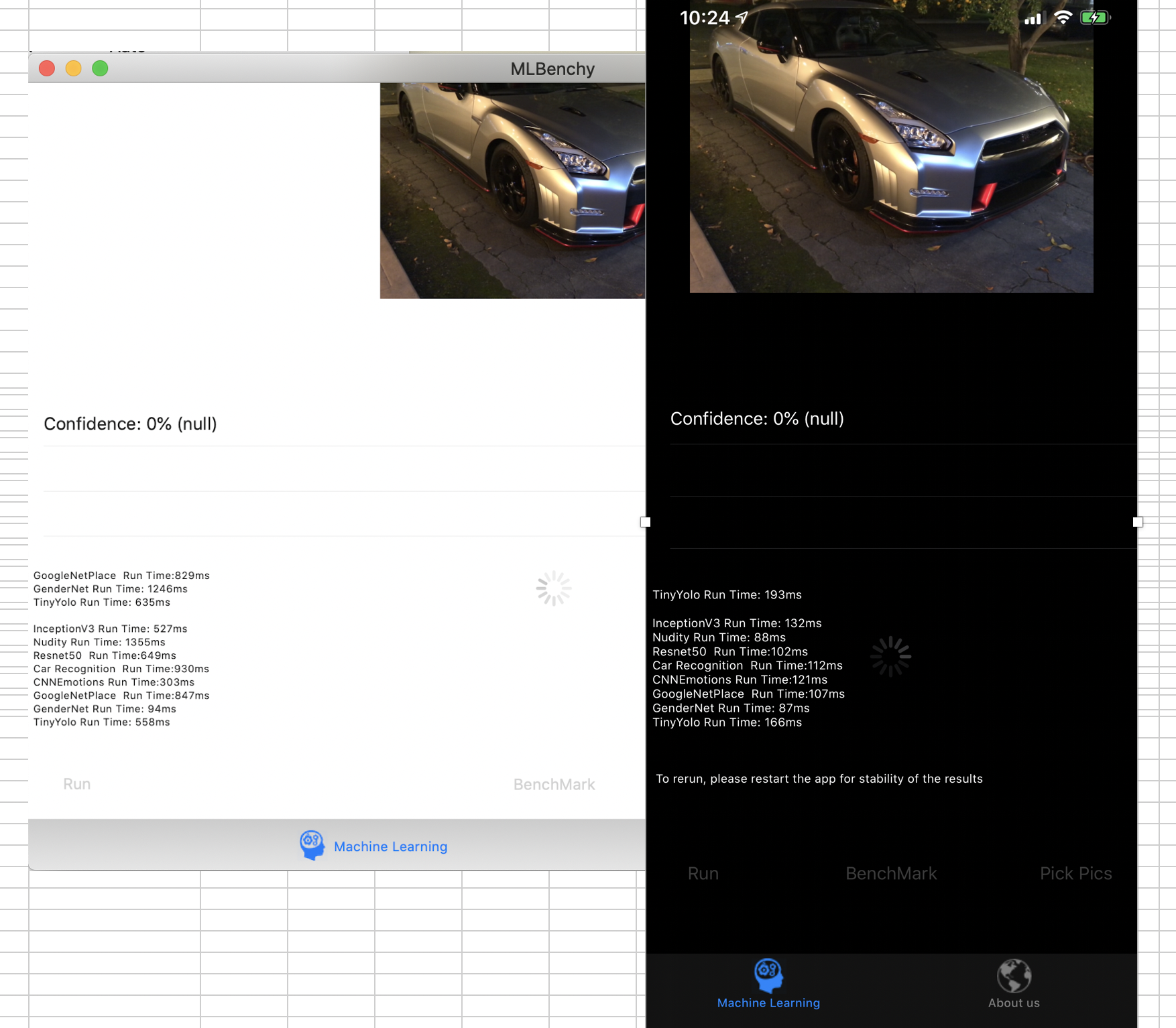

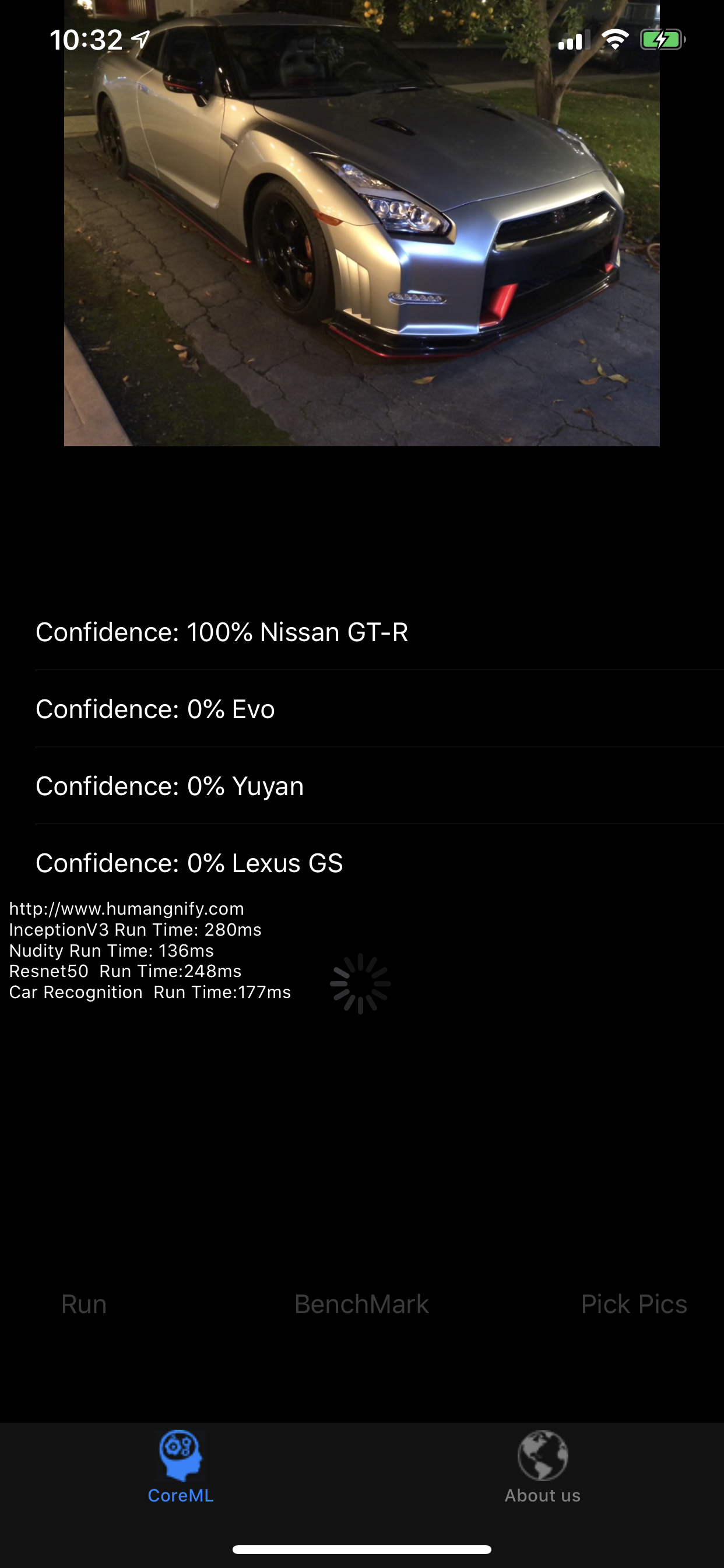

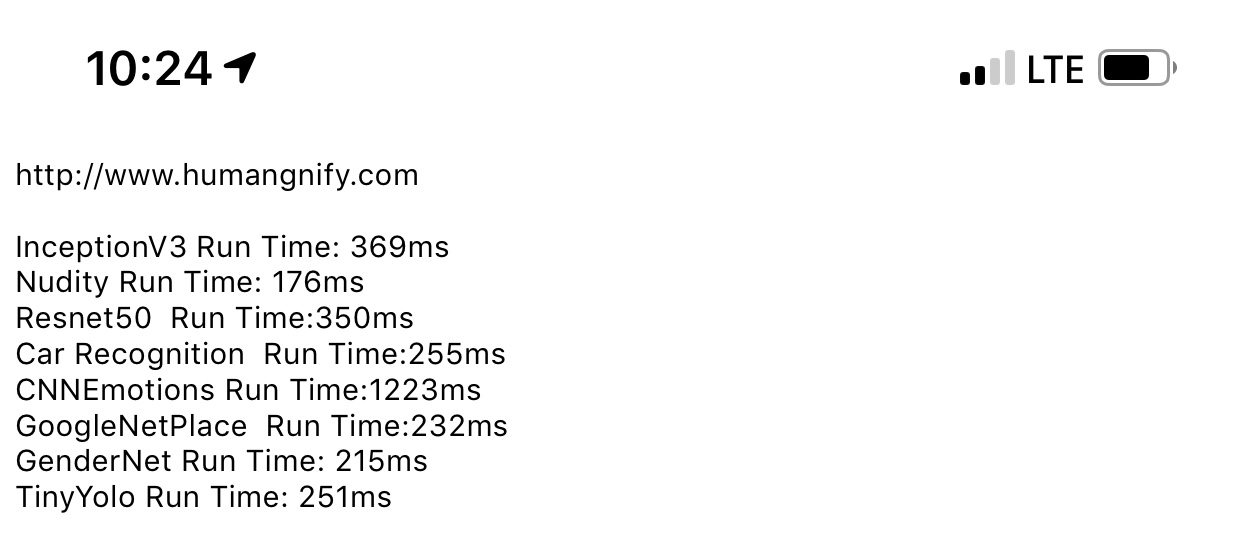

ResNet50 (picture input)

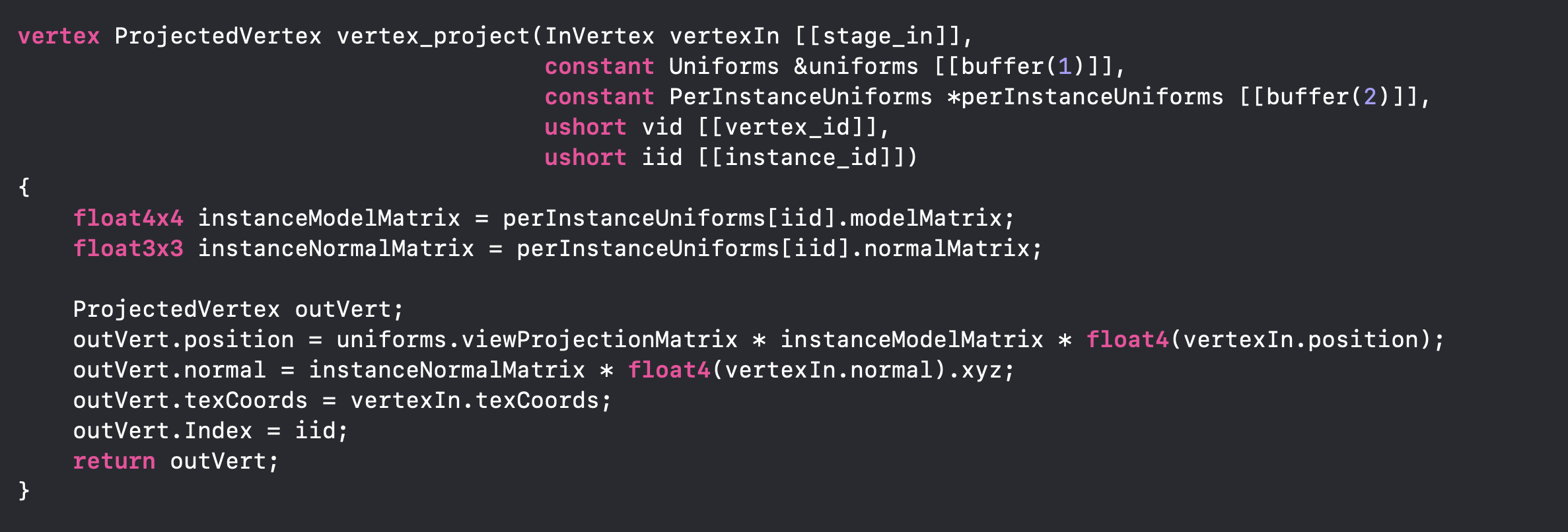

ResNet is short for Residual Network. As the name indicates, the key idea this network introduces is residual learning.

What is the need for Residual Learning?

Deep convolutional neural networks have led to a series of breakthroughs in image classification, and many other visual recognition tasks have also benefited greatly from very deep models. So, over the years, the trend has been to go deeper, both to solve more complex tasks and to improve classification and recognition accuracy. But as networks get deeper, training becomes more difficult, and accuracy first saturates and then degrades. Residual learning tries to solve both of these problems.

What is Residual Learning?

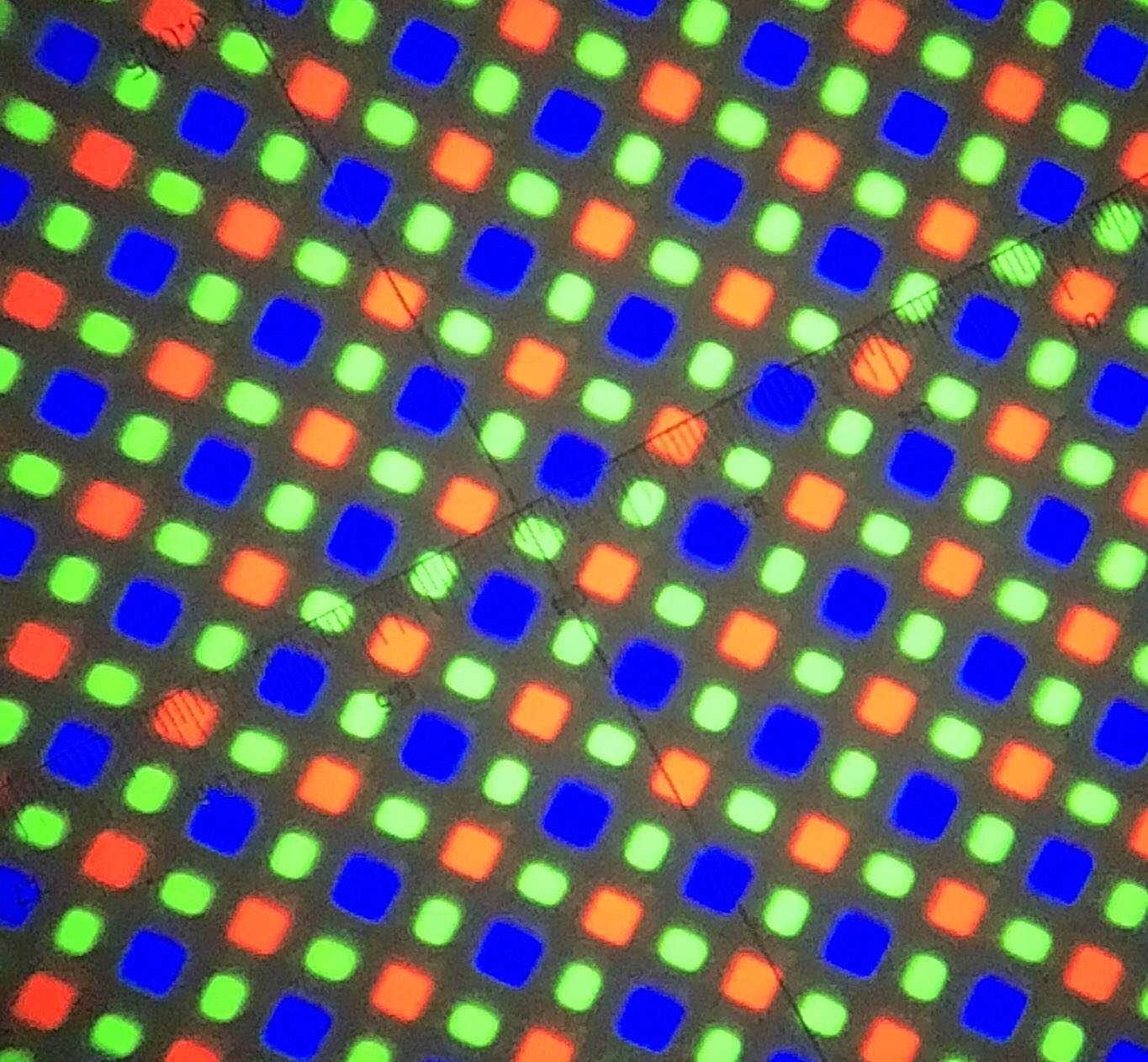

In general, in a deep convolutional neural network, several layers are stacked and trained for the task at hand. The network learns low-, mid-, and high-level features across its layers. In residual learning, instead of trying to learn the features directly, a group of layers learns a residual. The residual can be understood as the difference between the output those layers should produce and the input they receive; the input is then added back to the learned residual to form the output. ResNet does this using shortcut connections, which directly connect the input of the nth layer to some (n+x)th layer. Training networks of this form has proven easier than training plain deep convolutional neural networks, and the problem of degrading accuracy is resolved.
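The shortcut idea described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the ResNet50 implementation: it assumes two small fully connected layers in place of convolutions, and the names `linear`, `relu`, and `residual_block` are hypothetical helpers chosen for this example.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, value) for value in v]


def linear(weights: Matrix, v: Vector) -> Vector:
    """Multiply a weight matrix (a list of rows) by a vector."""
    return [sum(w * value for w, value in zip(row, v)) for row in weights]


def residual_block(inputs: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Return relu(F(inputs) + inputs), where F is two linear layers.

    The stacked layers only have to learn the residual F; the shortcut
    connection adds the untouched input back before the final activation.
    """
    residual = linear(w2, relu(linear(w1, inputs)))
    return relu([r + value for r, value in zip(residual, inputs)])


# With all-zero weights the residual is zero, so the block passes its
# (non-negative) input straight through the shortcut.
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block([1.0, 2.0], zeros, zeros))
```

The all-zero case hints at why very deep stacks of such blocks are easier to train: a block that has nothing useful to add can fall back to the identity instead of degrading the signal.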


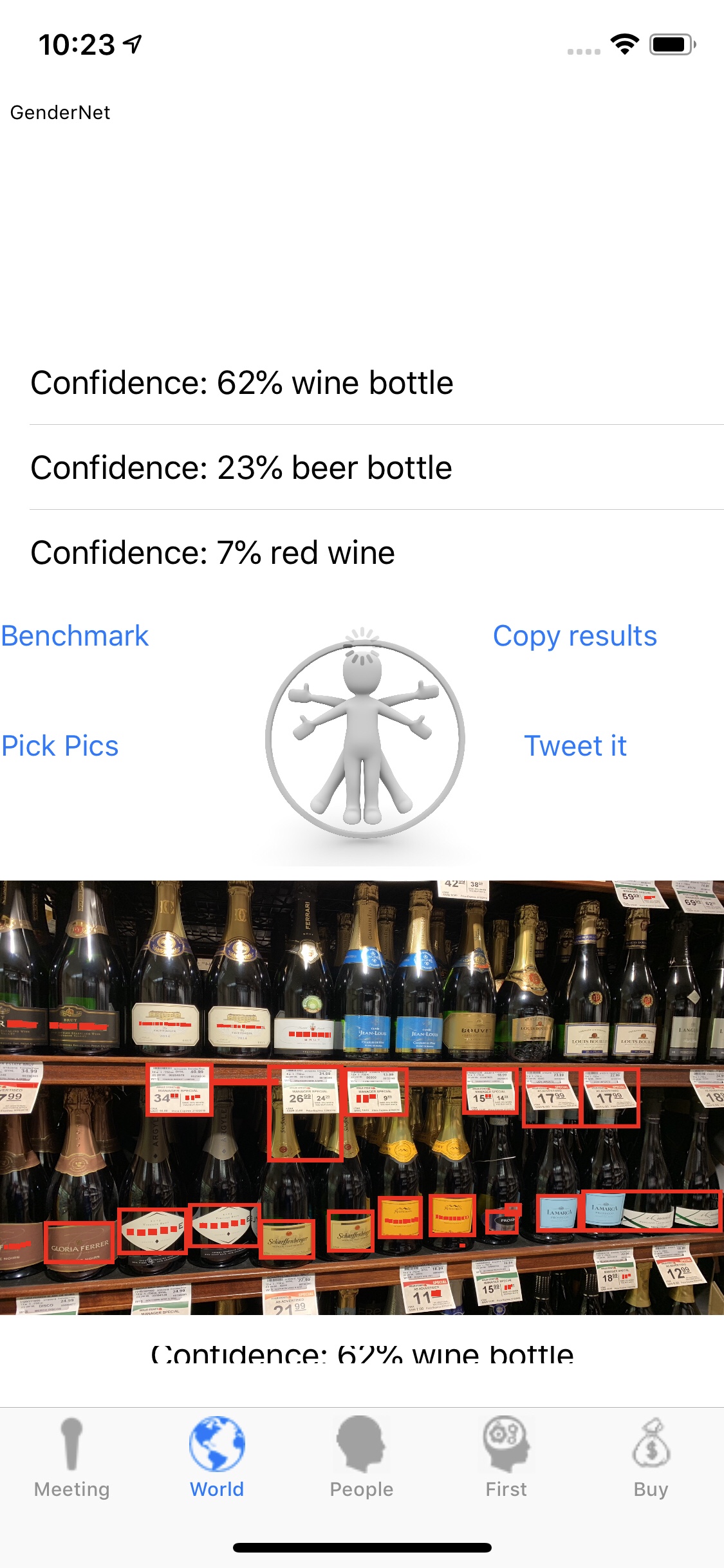

Gender Net (picture input)

Extracts the age and gender of a face.

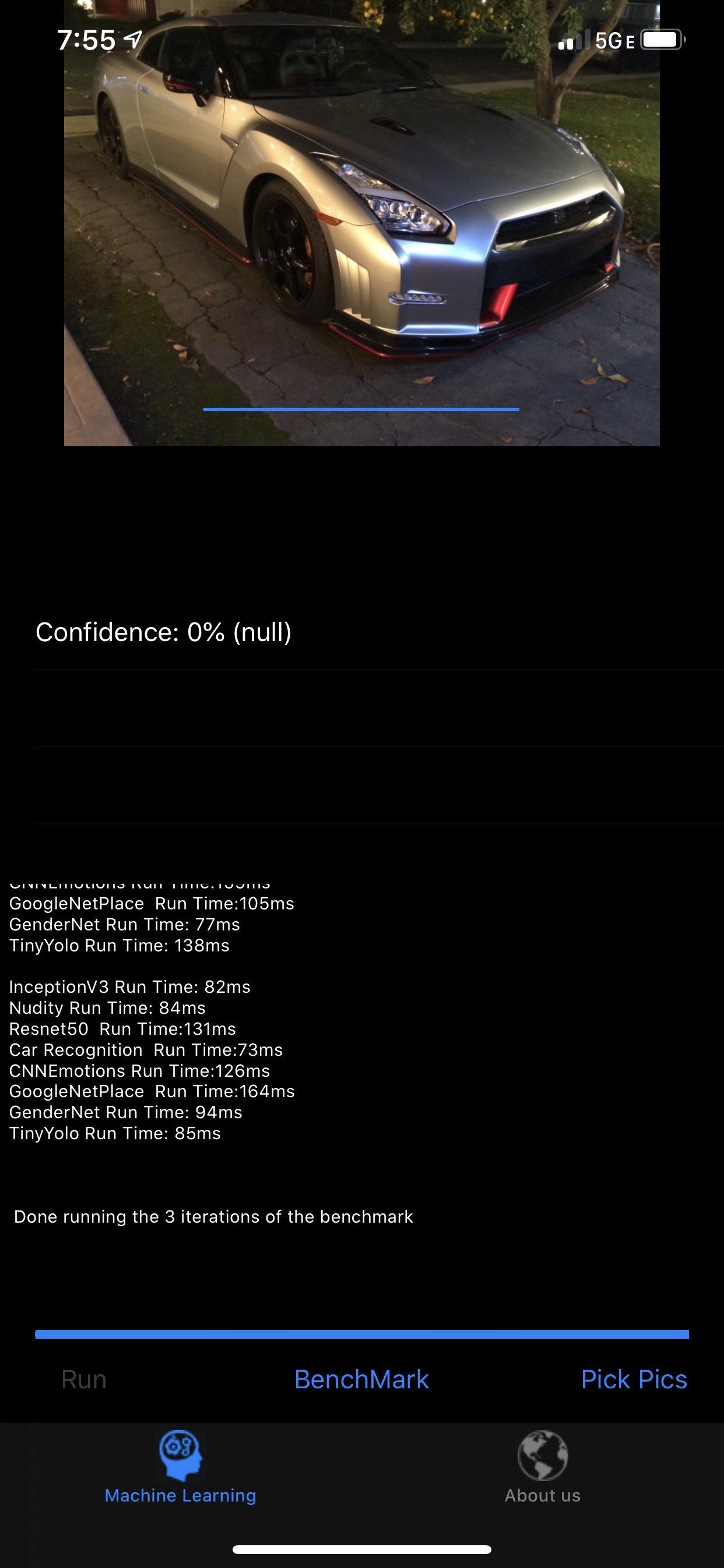

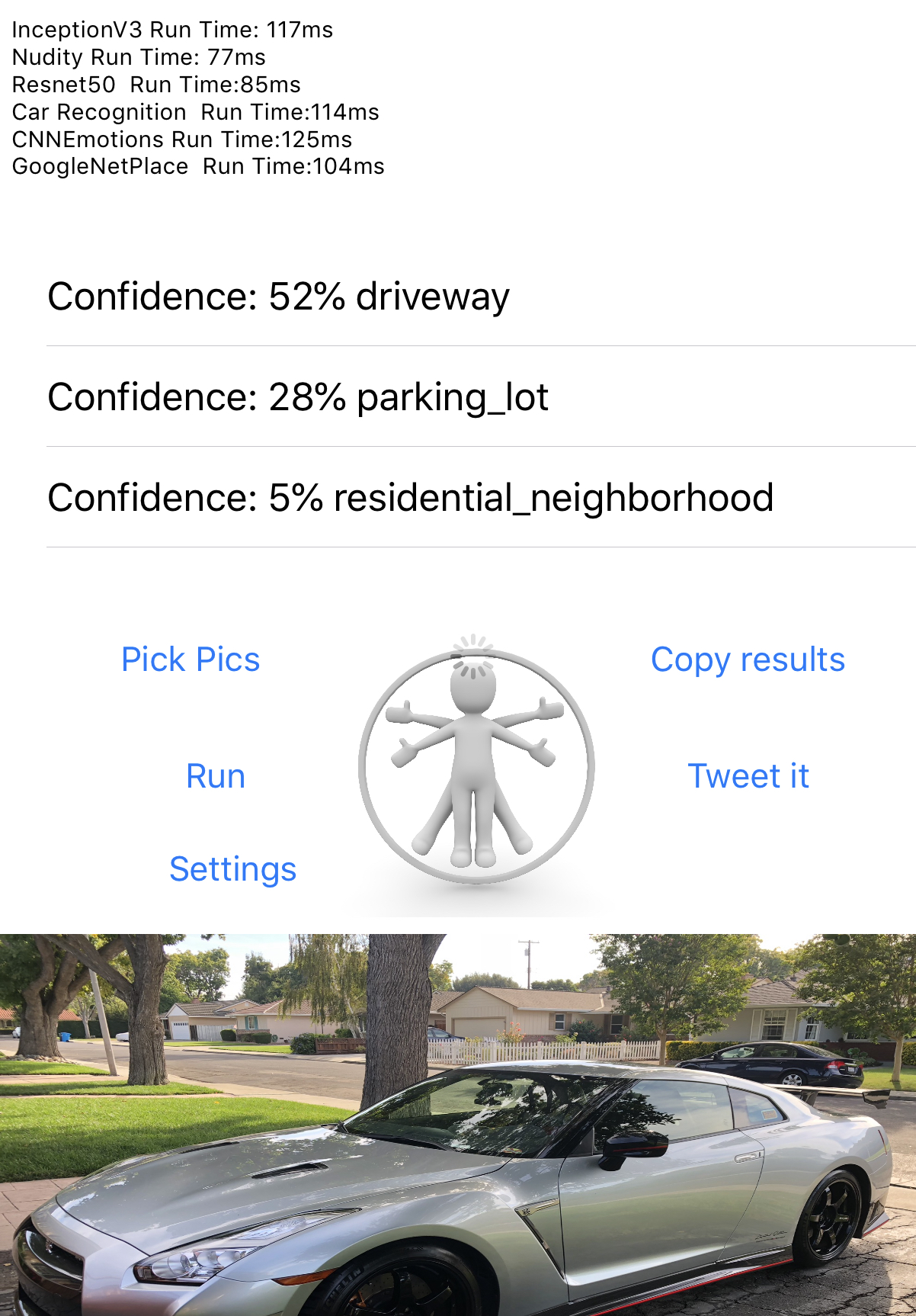

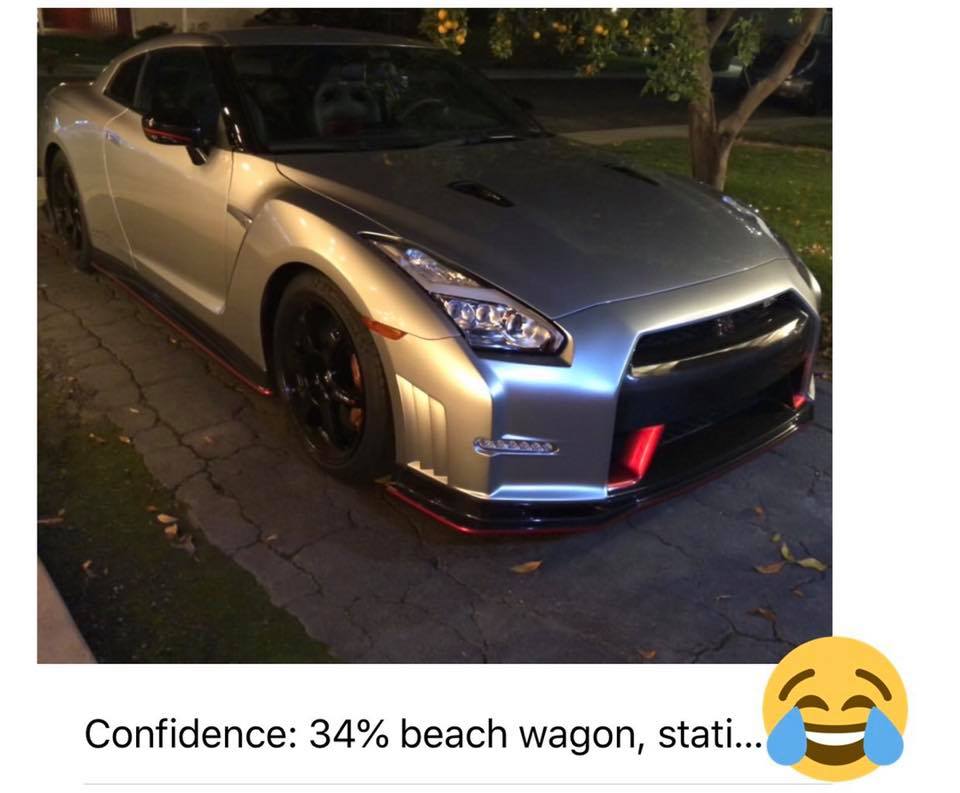

Car Recognition (picture input)

Recognizes the model and brand of cars on the US market.

CNNEmotions (picture input)

Part of GoogleNet, this one recognizes the emotion in a picture and gives an idea of the mood the picture conveys.

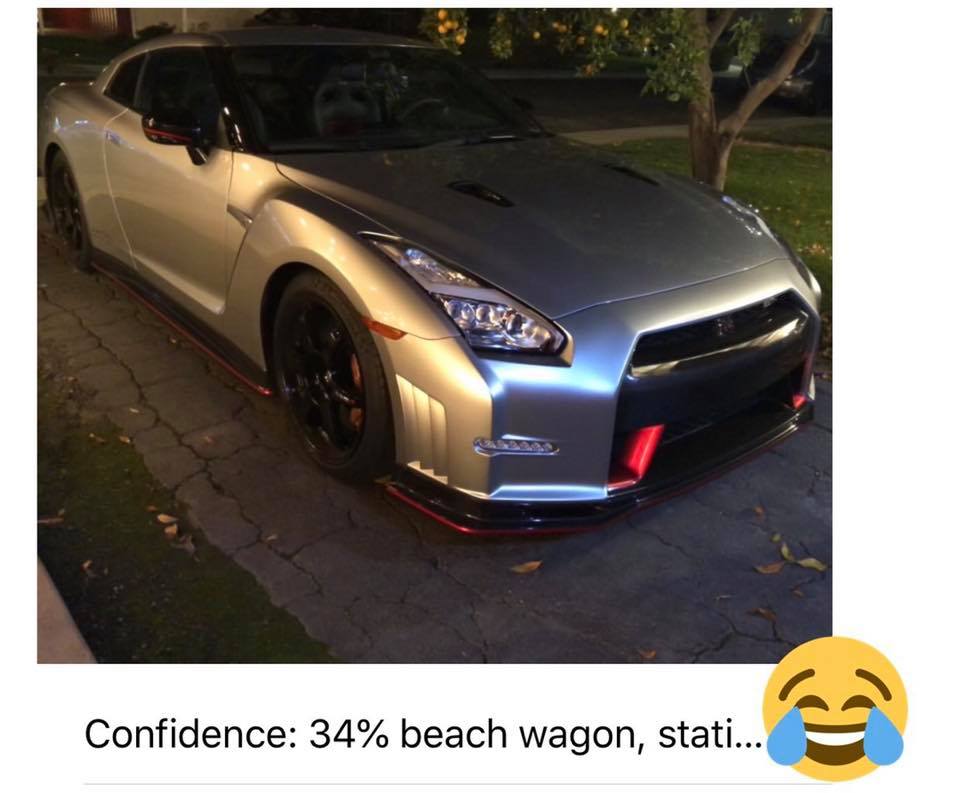

Tweet Classifier (text input)

This one classifies how people feel and what kind of emotions they try to express in a text, using #DNLP.

GoogleNet Places (picture input)

Recognizes places in a picture; it is part of GoogleNet.

Nudity Decency (picture input)

This one gives you a score of how appropriate a picture is with regard to nudity.

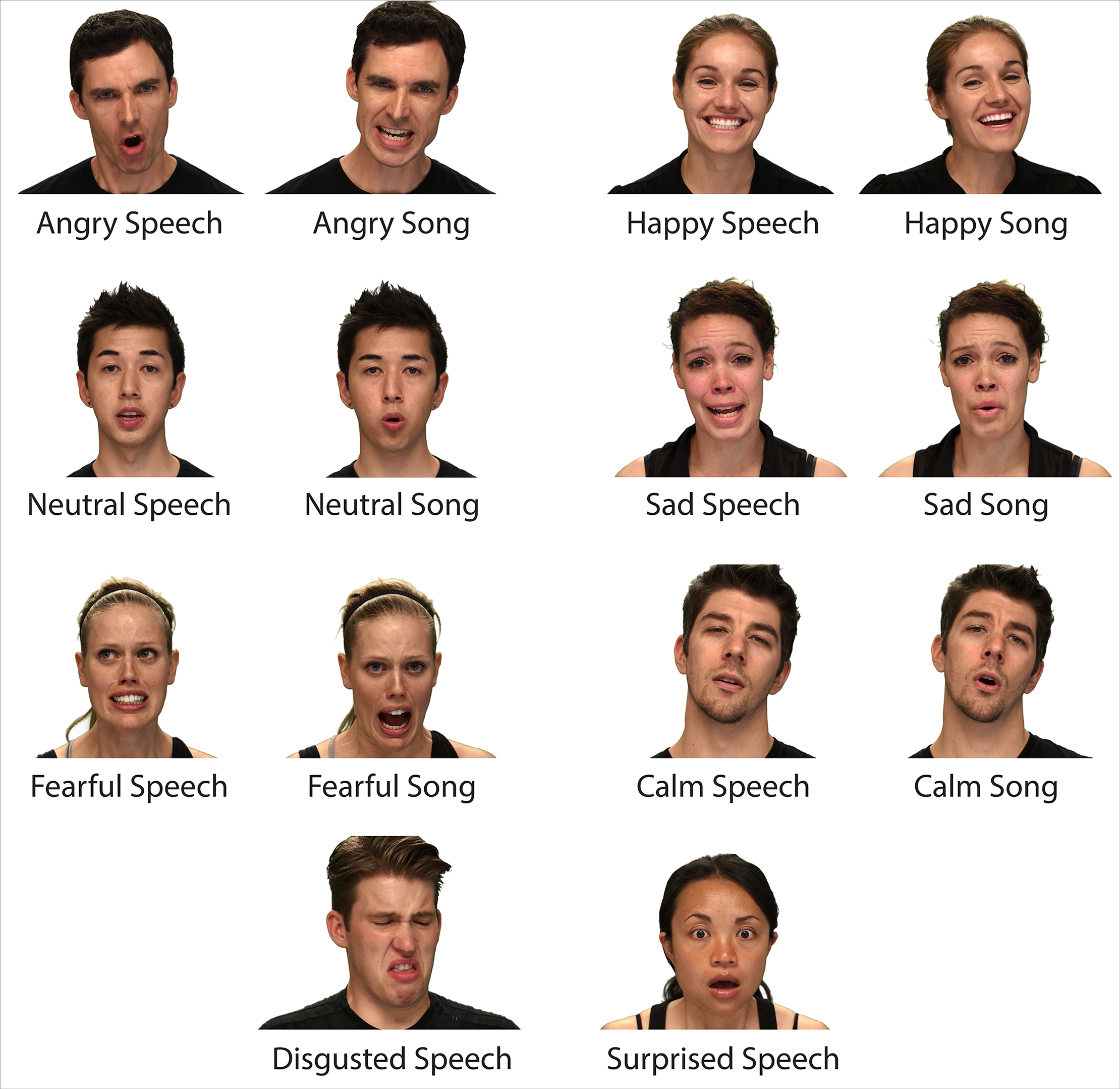

Face Emotions (picture input)

This one recognizes facial emotions using a different network structure; I use it to validate the results of CNNEmotions.

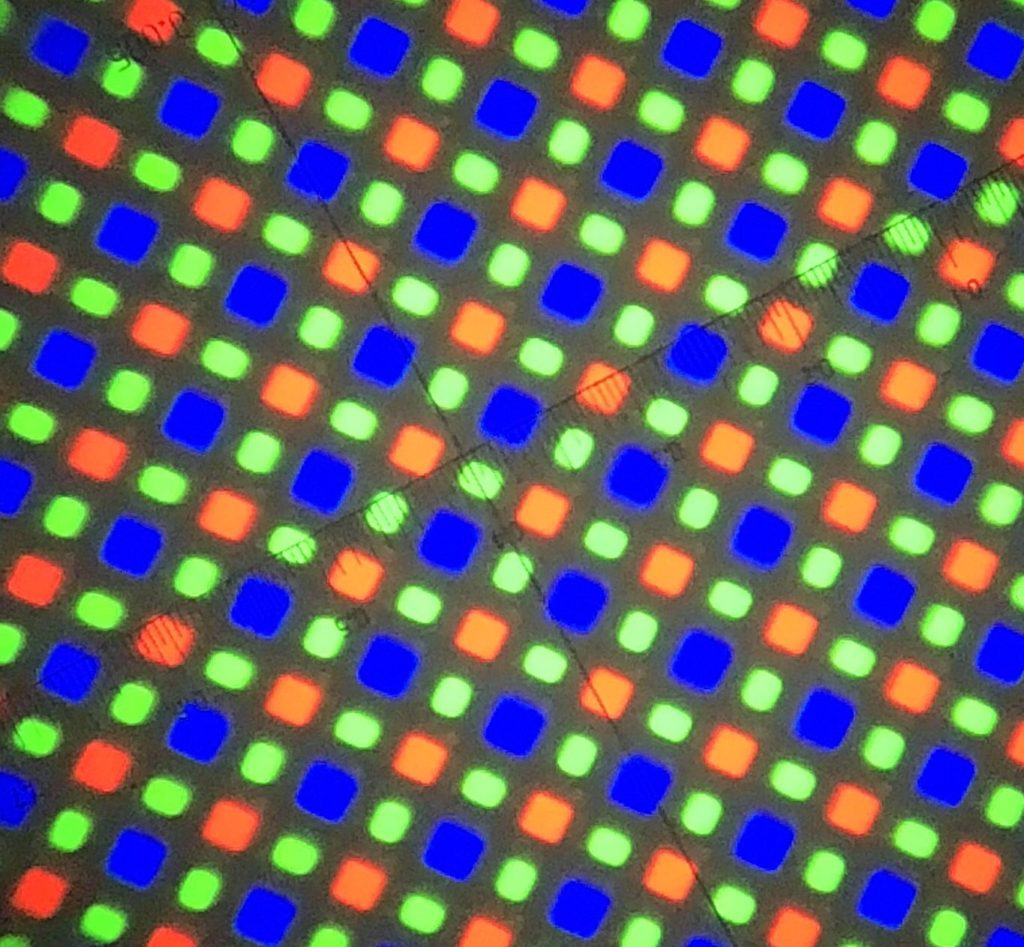

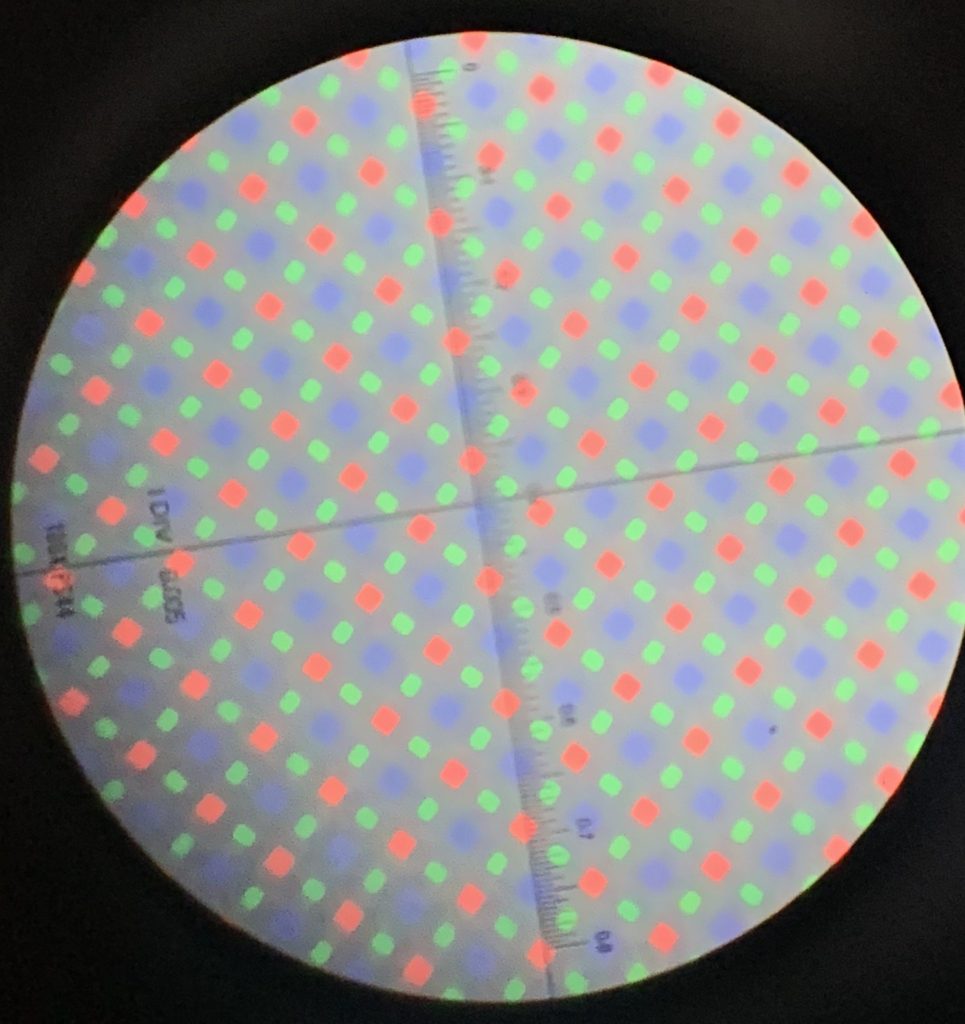

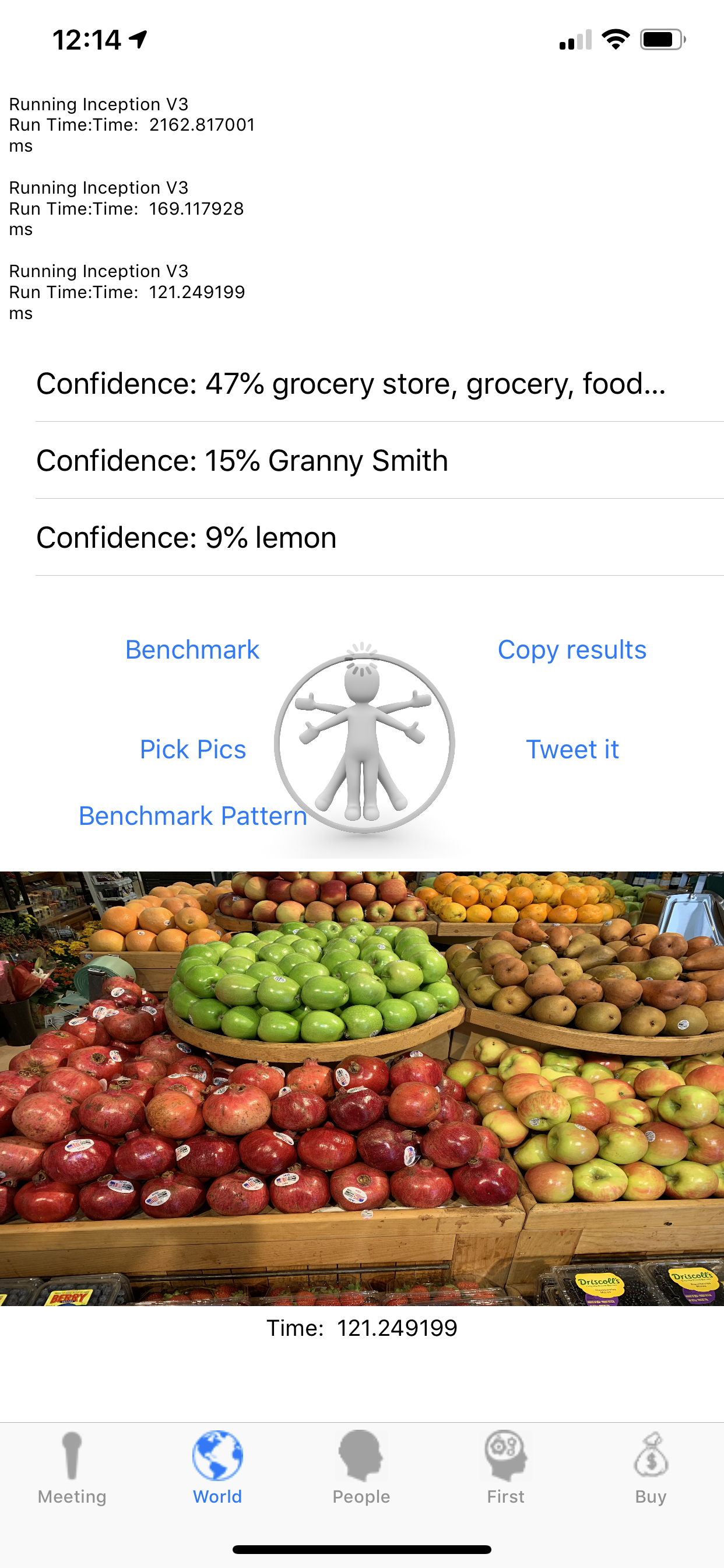

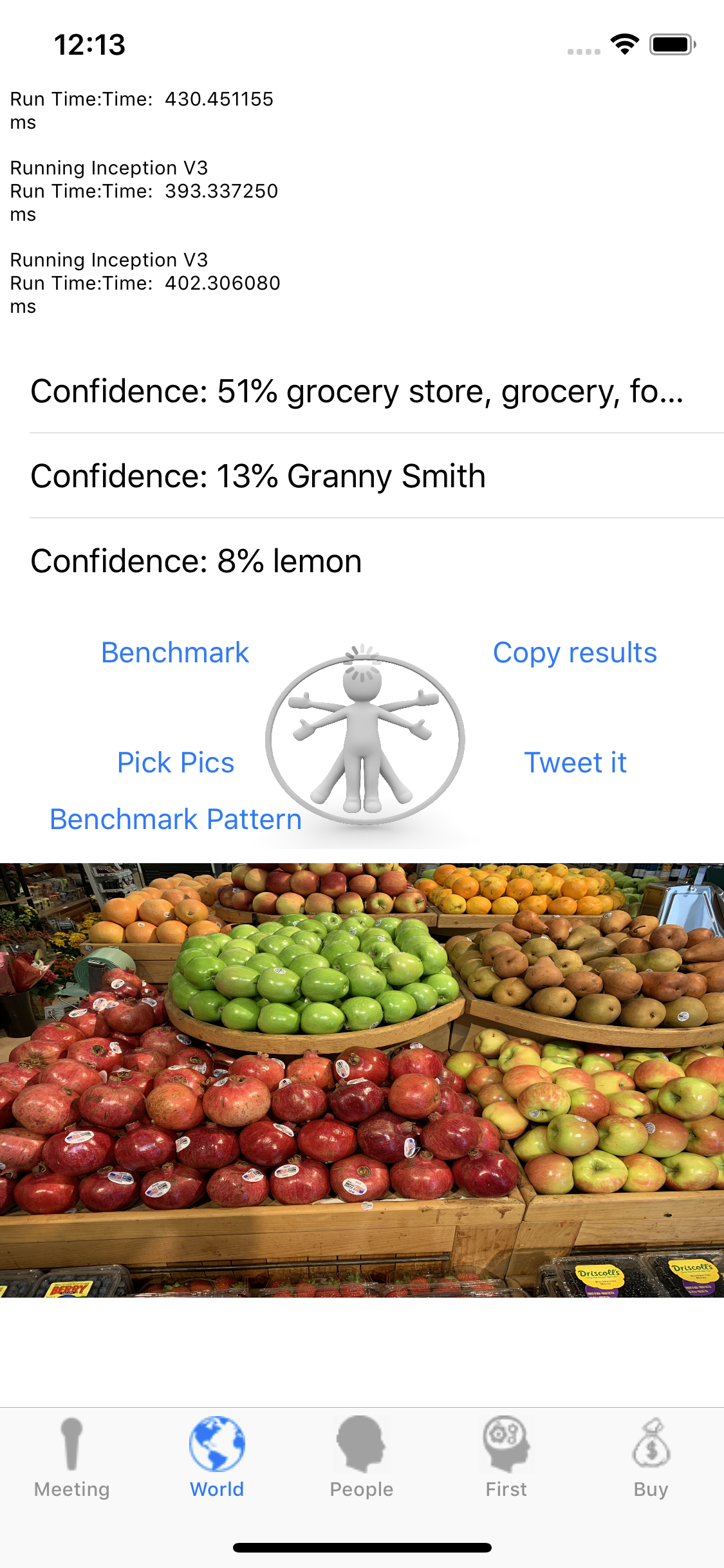

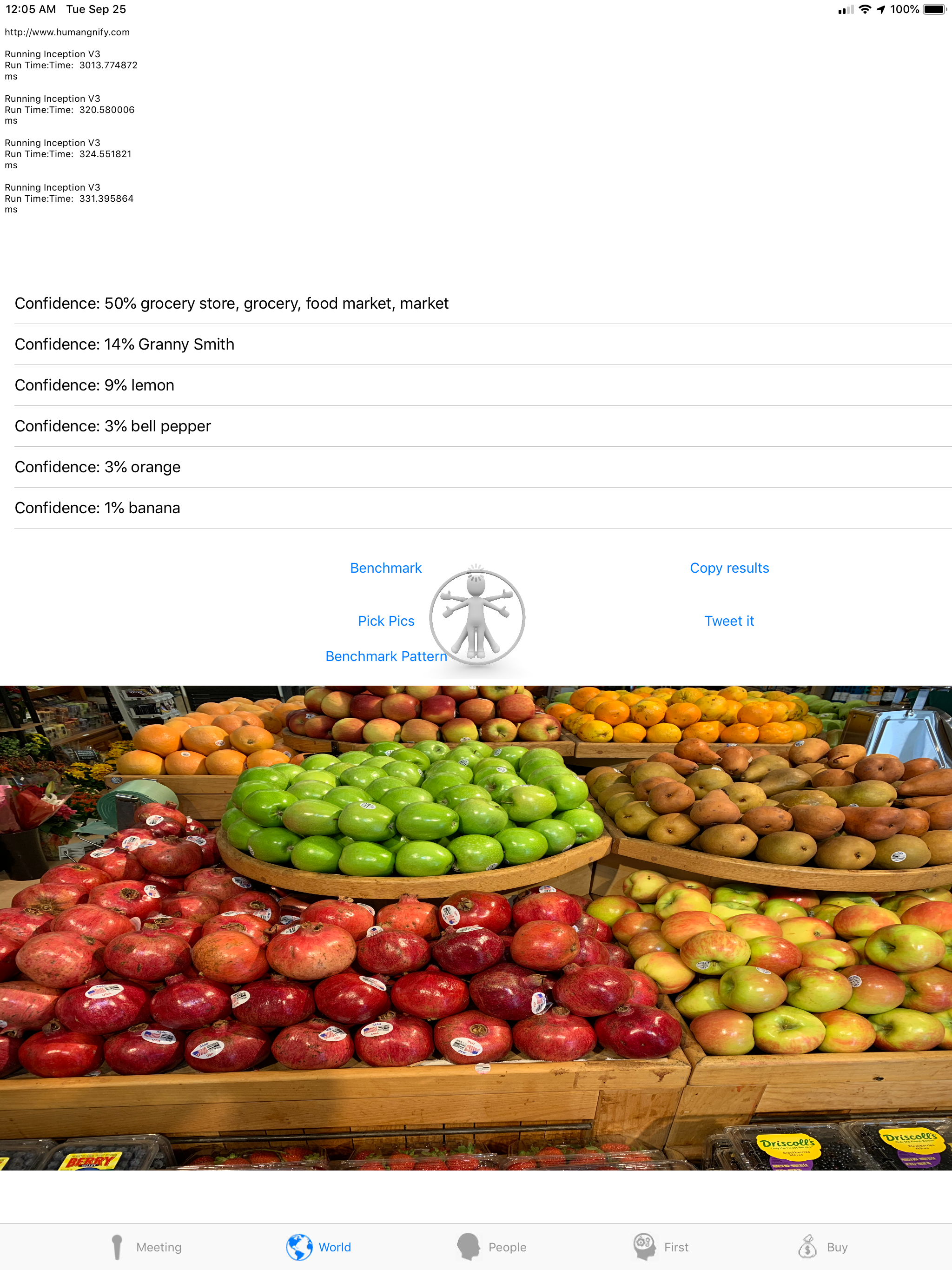

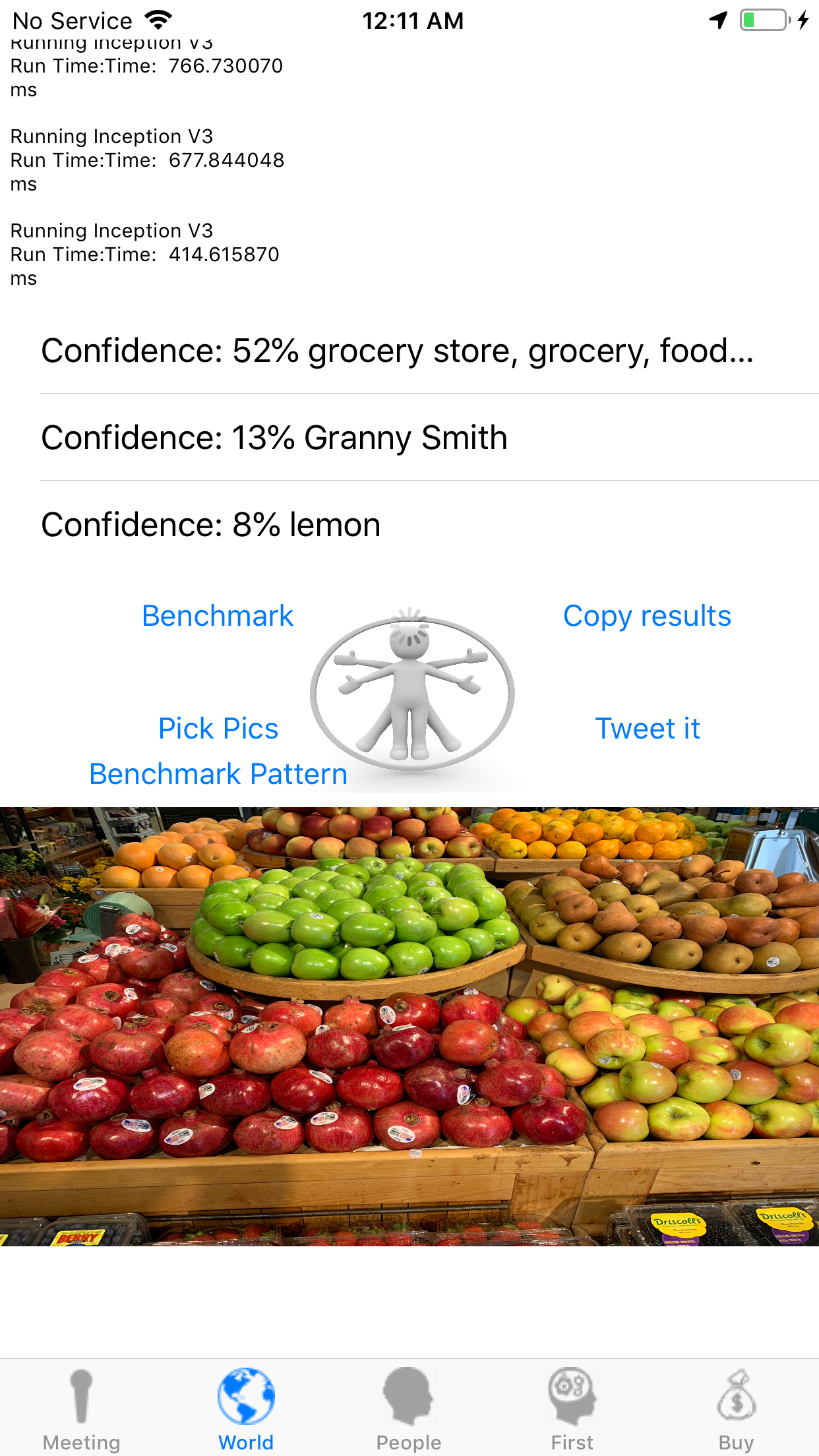

Inception V3 (picture input)

A legend in image machine learning, it classifies the objects in a picture and is never far from the state of the art. This version comes from GoogleNet.

Sentiment Polarity (text input)

A #DNLP model that tells you the sentiment conveyed by each sentence of your texts.

Tweet Personal Attack Detector (text input)

Detects aggressiveness toward the reader; developed in house.

DeepSpeech Voice Recognition (audio input)

The famous DeepSpeech, modified and recoded to work with the iPhone DSP and Apple's convolutional neural network engine. It recognizes what you say fairly accurately without draining your battery quickly. Tuned in house, and used to index whatever you want: your meeting, your day, your life …

Speaker Recognition (audio input)

Detects when the speaker changes to another person, learns the new person on the fly, and then generates a timetable of the different speakers in a recorded audio. This allows humangnify to provide you with a perfectly indexed meeting.
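The last step, turning per-window speaker labels into a timetable, can be sketched as follows. This is only an illustration under stated assumptions: it assumes an upstream model has already tagged each short audio window with a speaker label, and the `Segment` layout and the `build_speaker_timeline` function are hypothetical names, not the app's actual code.

```python
from typing import List, Tuple

# (start_seconds, end_seconds, speaker_label); this layout is an assumption.
Segment = Tuple[float, float, str]


def build_speaker_timeline(windows: List[Segment]) -> List[Segment]:
    """Merge consecutive windows from the same speaker into single turns."""
    timeline: List[Segment] = []
    for start, end, speaker in sorted(windows):
        if timeline and timeline[-1][2] == speaker:
            # Same speaker keeps talking: extend the current turn.
            previous_start = timeline[-1][0]
            timeline[-1] = (previous_start, end, speaker)
        else:
            # Speaker changed: open a new turn.
            timeline.append((start, end, speaker))
    return timeline


windows = [
    (0.0, 2.0, "speaker_1"),
    (2.0, 4.0, "speaker_1"),
    (4.0, 6.0, "speaker_2"),
    (6.0, 8.0, "speaker_1"),
]
for turn in build_speaker_timeline(windows):
    print(turn)
```

Each entry in the resulting list is one uninterrupted turn, which is the kind of structure a meeting index could be built on.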

Insult Detector (text input)

This one is trivial: it detects insults. It is home made, using a regular #DNLP. It takes a text and scores it from 0 to 100, where 0 is polite text and 100 is seriously offensive English.
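As a rough sketch of the 0 to 100 scale, the snippet below maps a classifier output onto that range. It assumes the underlying model emits a probability between 0 and 1, which the description above does not state; `to_insult_score` is a hypothetical helper name.

```python
def to_insult_score(probability: float) -> int:
    """Map an assumed insult probability in [0, 1] to a 0-100 score."""
    # Clamp first so out-of-range model outputs cannot escape the scale.
    clamped = min(1.0, max(0.0, probability))
    return round(clamped * 100)


print(to_insult_score(0.0))   # polite end of the scale
print(to_insult_score(0.25))
print(to_insult_score(1.0))   # most offensive end of the scale
```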